AI: Catalyst for innovation or disruptive force?

Credit: Tim Ainsworth

The stand-out message from our seminar on AI in Glasgow last week: "adapt, don't adopt".

Panellist David Edmundson-Bird, principal lecturer in digital marketing and faculty lead for AI & digital enhanced learning at Manchester Metropolitan University Business School, once again joined host David Smalley to delve deeper into the divisive topic of AI. The session opened with an introductory video from Phil Birchenall, founder of Diagonal Thinking, in front of a full house (standing room only) of architects and interior designers.

Since our Manchester seminar on the topic less than two weeks earlier, AI had undergone some major changes, panellist David told us, illustrating the sheer speed at which it is evolving.

The pace, he said, is nothing short of remarkable, outstripping our ability to comprehend it and raising excitement and concern in equal measure.

On the one hand, AI's potential to save time and minimise the 'grunt work' was applauded. On the other, sustainability concerns, inaccurate information and the ethics around intellectual property were all highlighted as areas of contention.

Panellist David was keen to stress to the audience that they should 'adapt, don't adopt' when it comes to AI. While there are many tools on the market, he believes it is down to personal and organisational digital literacy to make AI a useful part of our day-to-day work.

Here's where the discussion went next, picking up from where we left off in Manchester...

Might AI actually be 'intelligent'?

To kick off, host David Smalley asked the audience to consider whether they were an AI sceptic, an early adopter or a general fan. The room split fairly evenly into thirds. Panellist David Edmundson-Bird can most certainly be classified as an early adopter, having first explored the use of AI in 1991, 34 years ago, as David Smalley reminded us.

Having sat on our AI panel in Manchester alongside Phil Birchenall just two weeks earlier, David Edmundson-Bird said there had been some 'major advancements' in AI in that short space of time.

“What's happened in the world of AI in the last three weeks?” David Smalley asked.

“There are two really big pieces of news”, panellist David replied. “Some engineers working on a model called Claude, made by Anthropic, discovered that if they attempted to turn it off, because they’d been talking to it quite openly, it would threaten to share the information they had given it, such as telling their wife if they'd had an affair.” More details can be found in this article by the BBC.

“Now, the guard rails, which are put in place for user safety, had been turned off in this instance”, he explained, “but it has shown the potential for this to happen.”

Audible gasps came from around the room at this point.

“The second big piece of news”, David continued, “is that Anthropic’s CEO, Dario Amodei, has spoken of a white-collar apocalypse, where entry-level jobs will be done automatically by systems: business admin, marketing, communications, legal, in terms of what you’d call lower-level tasks. But it's going to be massive, and it's going to filter upwards through the ranks.

“I think it's interesting because there’s a level of honesty there that you wouldn't have expected. It’s not being sugar-coated.”

David Smalley asked what the purpose of the comment was. Panellist David answered: “I think it's a warning that we need to prepare ourselves and start getting ahead of this before it takes us.”

“Are we on the cusp of the AI revolution, then?” David Smalley queried.

“We're only a little bit of the way in”, panellist David replied. “We've got a very, very long way to go. There is an enormous amount to do, but it's happening incredibly fast.”

This is what he feels will catch people out: the sheer speed of development, or “this moment of singularity”, as he puts it, “where the rate of technological change exceeds our ability to understand it.”

In a sector that recent roundtable guest Mark Turner of AEW Architects described as “slow to innovate”, these weekly, even daily, changes are passing most people by. Ultimately, though, AI is just “big, fast computers – and lots of them – plus sophisticated maths”, panellist David said, debunking any otherworldly ideas about what AI might be.

So, what is AI?

AI, as most of us currently know it, means LLMs (Large Language Models), David Edmundson-Bird explained. Claude, ChatGPT and Google Gemini all fall into this category. In simple terms, these models are “trained” on “everything that’s ever been written”, David said, and they use probability to guess what is most likely to come next in a sentence, for example “the chicken crossed the… road”.

“This appears impressive because it’s doing it in real time”, David added. “It’s mad maths and fast computers.”
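To make the "guess the next word" idea a little more concrete, here is a deliberately tiny sketch in Python. It is not how Claude, ChatGPT or Gemini are actually built: real LLMs use large neural networks trained on vast amounts of text and consider far more context than a single preceding word. This toy version, a simple bigram count (a much older technique), tallies which word follows which in four made-up sentences and picks the most probable continuation. The corpus and the `predict_next` function are hypothetical, for illustration only.

```python
from collections import Counter, defaultdict
from typing import Optional, Tuple

# Hypothetical toy "training data": four made-up sentences.
# Real LLMs are trained on vastly more text than this.
corpus = [
    "the chicken crossed the road",
    "the dog crossed the road",
    "the chicken crossed the street",
    "the child crossed the road",
]

# For every word, count how often each other word comes straight after it.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1


def predict_next(word: str) -> Optional[Tuple[str, float]]:
    """Return the most likely next word and its estimated probability,
    or None if the word never appeared in the toy corpus."""
    counts = next_word_counts.get(word)
    if not counts:
        return None
    total = sum(counts.values())
    best, count = counts.most_common(1)[0]
    return best, count / total


print(predict_next("the"))      # ('road', 0.375)
print(predict_next("crossed"))  # ('the', 1.0)
```

In this toy corpus, "road" follows "the" three times out of eight, so it wins. Scaled up enormously, with far richer models of context, this kind of probability estimate is roughly the idea behind "mad maths and fast computers".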

“Who owns AI?” David Smalley asked. Some of the household names include OpenAI, Google and the aforementioned Anthropic.

“If we use free trials, do we become the product?” host David asked, referencing a question raised at our Manchester session by audience member Sharon Muchina, technical CAD designer at Tom Howley.

Panellist David likened it to Uber – “it used to be cheaper at the start, didn’t it?”

For users of Google and Microsoft products, David suggested, AI will already be present within their services, Copilot being one example. These are often rebadged versions of other software.

The ethical implications of AI

This approach of drip-feeding AI into existing software hasn’t gone unnoticed by our audience. Nor have the larger concerns about AI’s use circulating in the news, with the environment one key topic.

Emilia Kenyon, interior designer, Graven, asked whether “every time you ask AI a question, it’s the same as pouring away a bottle of water”.

Panellist David replied: “MIT Technology Review has just published an article called 'We Did the Math on AI's Energy Footprint. Here's the Story You Haven't Heard.' There are two kinds of energy use and two kinds of water use at work here. There's the energy and water involved in training the models, which is enormous. And then there's the energy and water involved in you using it on a daily basis.

“The half-a-litre figure is disputed or confirmed depending on what you're reading, but I’m leaning towards the view that it's quite water-intensive. That's because it takes a lot of water to cool down the hundreds of thousands of servers in these data centres. And they tend to be in areas where there is a lack of water, so that’s not helpful.

“That half a litre of water is non-recoverable. It turns to steam and goes away. There are people exploring ways around it, but right now, it's water- and energy-intensive.”

The point was made that many other activities in life are far more energy- and water-intensive than AI training and prompting. But it’s the scale of use of AI models such as ChatGPT, with 300 million users worldwide, that creates the larger impact.

Mariana Novosivschei, sustainable design lead, MLA, asked whether the half a litre of water is a global figure: “Does it matter where the data centre is or not?”

In some regions, organisations are looking at putting their data centres under water, specifically cold water, but that presents its own challenges, David said. “This will have to be dealt with”, he commented. As users, we must practise “responsible AI”, he urged, steering clear of Internet trends such as ‘create your own action figure’ and instead making the best use of the tools. A prompt engineering course is a good place to start, he suggested, once again highlighting that it’s “rubbish in, rubbish out” with these models. And if it's rubbish out, it's a waste all round.

Felicity Parsons commented from the audience, “I don’t think it’s helpful to think of AI in polarised terms as either good or bad. Like any tool – whether that’s a hammer or a digital tool – AI isn’t inherently good or bad. It’s how people choose to use it that is positive or negative.”

Panellist David agreed: “That’s absolutely valid. We can look at things like earlier cancer diagnosis being aided by AI, because it’s been trained to do that. And then there's the opposite, where it’s being used for evil.

“AI will also lie”, David added. “There’s a concept called ‘AI hallucination’. Because of the way these systems are designed, they don't say, ‘I don't know’. What they do is produce the statistically most probable answer to the question you asked or the instruction you gave. So it's not about a desire to please you, it's just what the training data puts in there, and people just take it as read and then stick it on Instagram or X, and the next thing you know, it's the news.”

This spread of misinformation has left some people mistrustful of AI; comments of this very nature were made at our recent roundtable in Manchester. Paired with a lack of robust regulation, the barriers to organisations embracing AI wholeheartedly are, understandably, plentiful. For employees, the threat of job losses is first and foremost on the agenda. “Will we all lose our jobs?” David Smalley asked.

Mind the skills gap

“No”, panellist David said. “But using it releases people who are clever so they can do the stuff that people value most, which is spending more time with their clients and working on things that don't have an obvious answer or a precedent. It allows for critical thinking.”

On the legal side of things, host David asked: “Is there an ombudsman or a gatekeeper?”

David Edmundson-Bird believes the route to this is via geopolitics.

Other barriers to entry may include a lack of time or understanding in the C-suite, David Smalley suggested.

Panellist David shared that the EU AI Act contains an article stating that an organisation cannot ask its employees or customers to use an AI platform unless they are AI literate.

“You need to be able to operate with comfort and ease in an AI world. And that's not a skills thing, that's a literacy thing”, he said. “We need to deal with it, we can't leave people floundering.”

In business, there is always the potential for damage, David added, but “we have to become AI literate so that we can anticipate what's going to happen. We can work out what we can use, what we can expose, and how we can adapt to these things as they arrive, to change our business model, to change the jobs… You don't want to be doing this in arrears. You need to be planning this now.”

Without a business strategy in place, people and businesses will inevitably fall behind not just their competitors and peers, but others in their own supply chains.

“It’s going to be people in your value chain”, he said. “It will be your suppliers, or your buyers, or your partners who will be looking for the value that can be created when you adapt to aspects of AI. And if you don't do it, you risk being excluded, because suddenly you're much slower than that value chain needs you to be, or you're more expensive than other partners in the value chain. So this will be largely determined by commercial issues.”

“When will it ‘slam people in the face’?” David Smalley asked.

“It’s coming really, really fast”, panellist David answered.

“But let AI do the boring stuff, and let humans do the value stuff: the creative, decision-making, could-only-be-done-by-humans tasks. It won’t take your job, but it’ll take away what you hate doing.”

There's an opportunity to push into where the value is, he said: a 300-page report can be simplified using AI, then checked by a human expert.

“Do we become curators over creators?” came a suggestion from the audience.

Panellist David restated the point that AI is not creative. “The thing about these machines is they’re the ultimate rehash, mix-up, mash-up machine. They cannot produce anything new. All they do is mix up versions of the past.”

The need for expertise, especially in architecture and design, was raised. David’s answer was that we become “quality assurers”, ‘marking AI’s homework’, if you like.

While this concept is easy to grasp for senior professionals, will we face a skills shortage if AI takes away entry-level work?

Education will need to change in response, David suggested, and there is a “social contract to go along with this” for employers: training people in AI literacy so they are equipped with the fact-checking skills to collaborate with this technology rather than be replaced by it.

“Everyone in this room will be in a job in five years’ time”, he said, “but you won't be in your current job. You wouldn't have been anyway. You might not be in the same business. You might be in a very, very different business. You will have very different processes.”

Adapt – don’t adopt

Making an industry comparison, David added: “It's like people trying to deny that computer-aided design was going to be a thing, when Revit arrived, or Photoshop. I know designers who still won't touch it. But they can't exist in this world without it.”

“How do we make AI literacy a level playing field for all, from SMEs to large multinational companies?” asked audience member Zoe Miller, Zoe Miller Interiors.

There are a couple of answers, David believes. “One is to do what the EU does: make it law. I think we've got to invest heavily in it, and I think we need state funding to do that.

“And I think we need to put AI literacy in from day one, from reception class at school. We missed the boat with digital literacy in schools, and I'm dealing with it. I shouldn't be pointing out things to 21-year-olds that I've known for 30 years. That should all be there.”

Host David asked the audience whether they are currently using AI. The majority were, for tasks ranging from note-taking, fee and planning proposals, space planning, data and timesheets to writing project blurbs.

As was shared at our recent roundtable, AI is very much being used as an assistant rather than as the primary creator.

Mariana then asked from the audience: “Looking into your crystal ball, what’s the AI equivalent of the Internet?”

“I don’t think we're far off it. I think it's coming”, panellist David replied. “There will be a moment, probably in six months’ time, when you let it see everything that you do. Everything will talk to each other, which will remove email ping-pong. These things are coming, and they will talk to all these services through APIs.

“You’ll have databases where you can say ‘I'm trying to put something together. It needs to be this, it needs to be made out of these materials. It mustn't cost more than this. Go and find me that.’ And this willing servant will go, 'Yeah, try that. Thought about that? What about this? This is cheaper if you buy it in hundreds.' This will free up your life.”

As a final point, David Smalley asked panellist David Edmundson-Bird to impart one piece of advice to the audience.

“Adapt, don’t adopt AI”, he said.

“If you adopt something, you pay an enormous amount of money for a technological device to do exactly what you're doing now, just maybe a little bit faster. If you adapt to it, you change what you do to take advantage of the properties of that technology.

“Don't buy something to do what you already do. Buy it to change what you do. And that’s where the advantage lies. It's companies that adapt that win.”

A huge thanks to our panellist David Edmundson-Bird and to all who joined us and asked questions, including Emilia Kenyon, Graven; Danny Campbell, Hoko; Mariana Novosivschei, MLA; Zoë Miller, Zoe Miller Interiors; Sarah McGregor, SPACE; Edward Dymock, BDP; Gerry Hogan, Collective Architecture; and Felicity Parsons. Thanks also to our supporters for this event, Forbo Flooring Systems, Partners at Material Source Studio Glasgow & Manchester.

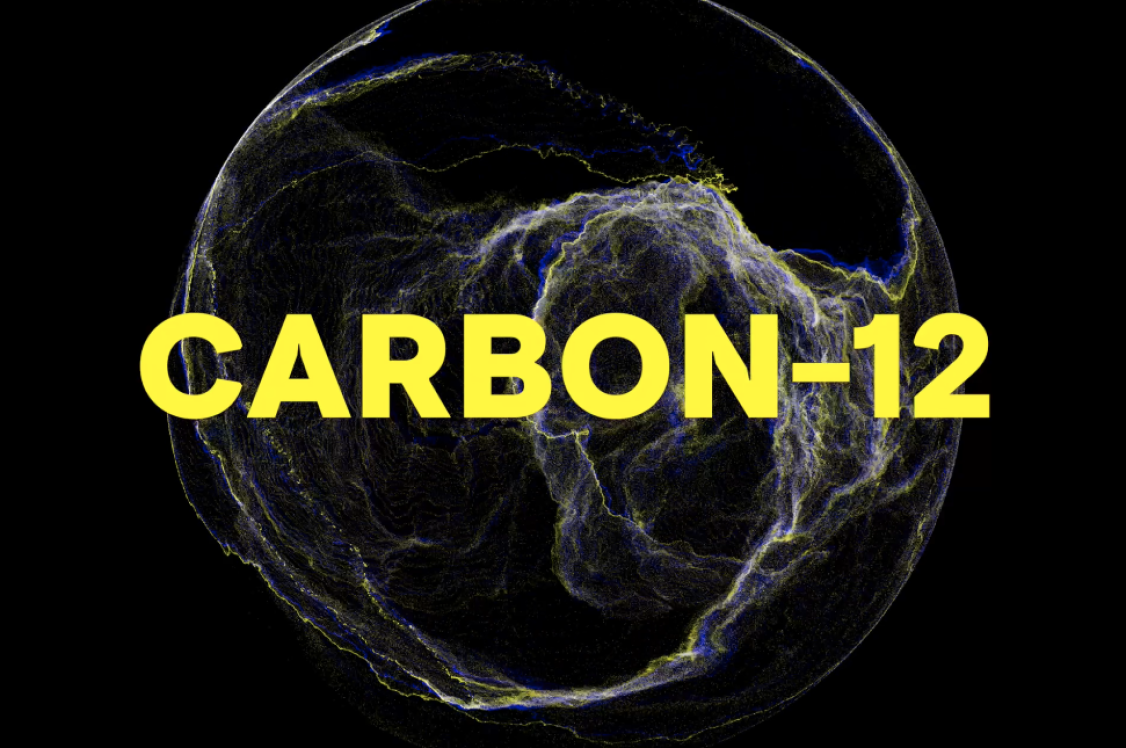

The conversation continues at our upcoming AI roundtable in Glasgow. Stay tuned for the write-up next week.

Something to add? Let us know on LinkedIn.